In this fifth article in G&T’s series on Artificial Intelligence (AI) in construction, we consider how to plan and prepare for AI-based technologies.

The article outlines the phases a typical AI project might follow, with a particular focus on what steps need to be taken prior to the deployment of AI solutions (addressing issues such as data discontinuity, inconsistency and inaccuracy). Finally, we look at what one London-based tech start-up is doing to de-risk construction programme schedules using Deep Learning AI models.

Once determined that AI solutions can meet specific needs – whether these be automating repetitive tasks, improving quality control or reducing project timelines – there are three high-level phases that form part of any project. These are:

- Discovery: Understanding the problem that needs to be solved

- Alpha phase: Building and evaluating your AI/ML model

- Beta phase: Deploying and maintaining the model

The Discovery Phase: Understanding the Problem

The discovery phase tends to be the longest. Time is needed to understand what problems need to be solved and how feasible it would be to use your current data sources (which may not be in the best condition) in a new way. An assessment of the quality[1] of the data is key, as training an algorithm on erroneous data will yield poor results. The predictive capability of the model will be compromised if the datasets don’t contain clear or accurate patterns.

Assessing your data and understanding its limitations is best done by a data scientist – someone that is familiar with measuring, cleaning and maintaining data. They will assess the quantity, accuracy and frequency of the data, looking closely at its sources to ensure data is reliable/impartial and will be truly representative. Data scientists will also explore what modelling approaches could be suitable for the data that is available, factoring in the location, format and accessibility of the data.

A multidisciplinary team – comprising specialists such as data architects, data engineers, data ethicists and, importantly, a domain expert familiar with the various aspects of the construction process – will yield the best results. The best teams will have experience of solving AI problems similar to the one you’re trying to solve, but also have broader familiarity with a wide range of machine learning techniques and algorithms and experience of deploying these at scale. There are various additional technical requirements important for any AI team to have such as an understanding of cloud architecture and cloud platforms, computer science and statistics, software development, backend systems, data storage and data bias removal – but a detailed exploration of these are beyond the scope of this article.

AI specialists will be able to manage the infrastructure – ie the data collection pipeline, database storage, how to mine and analyse the data for insights – and advise on what platforms/software tools could be used by teams to collate the technology across their project workflow. The best data science platforms will be fast, transparent and secure, allowing teams to:

- Build workflows for accessing/preparing datasets allowing for easy maintenance of the data

- Provide a common environment that enables sharing of data/code so teams can work collaboratively

- Allow for the easy sharing of output through dashboard applications

- Allow for project-specific or sensitive permissions to be controlled and monitored

The remainder of the discovery phase requires consideration of data quality and bias. The data will need to be diverse and reflective of the thing you are trying to model. Data in legacy systems may contain bias and have poor controls around it and so may not be compatible with AI technology. If this is the case, you may need to invest time and money to bring legacy systems up to modern standards.

Ensuring your system can keep data secure by following the National Cyber Security Centre’s (NCSC) guidance on using data with AI, as well as ensuring compliance with GDPR[2] and DPA 2018[3] also needs to be done in the discovery stage.

By the end of the discovery/data preparation stage, you should have:

- A clean dataset ready for modelling in a technical environment

- A list of features or measurable properties that will be generated from raw data sets

- A data quality assessment[4]

- A cost-benefit analysis to determine whether the service is viable and can solve user needs

Alpha Phase: Building and Evaluating

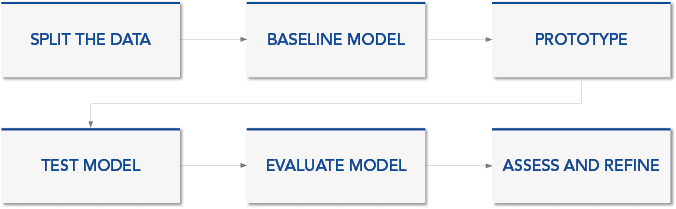

This is the AI prototype phase that will typically comprise the following steps:

Exploring each step of the alpha phase is beyond the scope of this article but in high-level terms the data will need to be split into different sets to train the AI models. A simple baseline model will need to be built to identify problems and act as a benchmark to compare more complex models against.

Once a baseline model has been established, more complex models can be prototyped through iterating. Lots of data will be used to mould several models before deciding on the most effective to solve the problem. These models will need to be tested to determine effectiveness and help identify which model provides the most accurate impression of how the model will perform once deployed.

Having selected your best model, this will need to be evaluated using unseen data to assess how it performs in the real world and then fine-tuned. Consideration is given to performance against simpler models, whether the model shows any signs of bias and if it can outperform human performance. With a final model, a decision can be made on whether to progress on the beta phase.

Beta Phase: Deploying and Maintaining

Essentially, the beta phase involves integrating the model into the decision-making process, running on top of live data to make predictions, interpretations and provide insights generated by the model. The three beta phase stages are:

- Model integration – performance test with live data and integrate/deploy within the decision-making workflow

- Evaluate the model – this needs to be continuous to ensure objectives are being met and the model is performing/whether it needs to be retrained

- Help users – train users to develop and maintain the system, ensuring they are confident in using, interpreting and challenging any outputs or insights generated by the model

This phase may require different skillsets to the alpha phase (which is more about assessing the opportunity and state of your current data sets). The beta phase may need professionals with experience in dev-ops, servers, networking, data stores, data management, data governance, containers, cloud infrastructure and security design. These skill sets may be better suited to an engineer rather than a data scientist but maintaining a cross-functional team will allow a smooth transition from alpha to beta.

After creating the beta version, AI should be running on top of the data, learning and improving its performance. However, you will need to continually monitor and evaluate the performance of the AI system once implemented, using monitoring frameworks to identify any incidents. This will help identify any issues and fine-tune the system to achieve optimal performance.

Addressing issues such as data discontinuity, inconsistency and inaccuracy

Good data is a key enabler to unlocking the benefits of AI. Data is the raw material of AI and therefore limited, inconsistent or inaccurate data sets will invariably impact the usefulness of AI and stunt its growth.

There is no shortage of data in the construction sector – massive amounts are being generated daily as every project and job site becomes a potential source for AI. However, the data is often ‘dirty’ (ie inaccurate, incomplete or inconsistent data) and unstructured (ie doesn’t neatly fit into a database/spreadsheet in neat rows and columns). Fortunately, there are tools and platforms that can now deal with unstructured data, but dirty data is an ongoing challenge.

Dirty data can negatively affect the performance of machine learning models. Dealing with dirty data is an essential part of data pre-processing and data cleaning. There are several strategies to handle it:

- Data Cleaning: The first step in dealing with dirty data is to clean the data, which involves identifying and correcting errors, missing values and inconsistencies in the data. This process can encompass a range of techniques, including data imputation, filtering or normalisation.

- Feature Engineering: ie the process of creating new features or modifying existing ones to improve the performance of machine learning models. By transforming or combining existing features, you can help models detect patterns that would be difficult to recognise otherwise.

- Outlier Detection: Outliers are data points that are significantly different from the other data points in the dataset. Detecting and removing outliers improves the accuracy of machine learning models, as outliers can cause the models to overfit or underfit the data.

- Regularisation: Regularisation is a technique that helps reduce overfitting[5] in machine learning models. By adding a penalty to the model's objective function, regularisation encourages the model to choose simpler, more generalisable solutions that are less sensitive to noise or outliers in the data.

- Data Augmentation: Data augmentation involves creating new data from the existing data by applying transformations such as rotations, translations, or scaling. This technique can help increase the diversity of the dataset and reduce the impact of dirty data.

It is important to carefully analyse the data and choose the appropriate techniques to clean and pre-process the data before training machine learning models to achieve optimal performance.

Data Discontinuity

Falling under the banner of ‘dirty data’ is the issue of data discontinuity. Data discontinuity refers to a situation where the relationship between input data and the output predictions or decisions made by a machine learning model changes abruptly, often due to changes in the distribution or nature of the input data.

Data discontinuity, which can occur for a variety of reasons (such as missing or corrupted data, changes in the measurement or sampling methods used to collect the data, or variations in the data due to differences in the sensors or by adopting other data collection methods) can be particularly challenging for machine learning models. This is because they rely on patterns in the data to make predictions or decisions. When faced with data that is significantly different from the training data, a model may struggle to make accurate predictions or decisions, leading to performance degradation or failure.

Addressing data discontinuity often requires developing techniques to adapt models (ie the algorithms) to new data distributions or to recognise when the data has changed significantly and either retrain or update the model accordingly. This is an ongoing challenge in AI research and development, as it is difficult to anticipate all the ways in which data may change over time or across different environments.

nPlan: A Case Study

nPlan is currently being used by major owners/operators and contractors. Names such as Network Rail, Shell, HS2, Office of Projects Victoria, Kier and Laing O'Rourke all regularly use the technology to inform their decision-making and improve ROI.

Towards the end of 2022, G&T had the opportunity to learn more about nPlan – a construction management software provider that uses historical project data to forecast outcomes of construction projects, helping them to be built on time and on budget.

The provider offers data-derived schedule of risk forecasting by using past project schedules and machine learning, to find out how long a project might take, what could go wrong and what can be done to reduce programme schedule risks and capture opportunities.

nPlan’s deep learning algorithm understands schedule data that can be used to test certain assumptions going into a project. Its unbiased system is entirely reliant on data – not opinion – so removes optimal belief bias as projects move through their stage gates. nPlan’s quantitative scheduling risk analysis (QSRA) system can also remove recency and availability bias (you only know what you know – not what the whole world knows) which can be hard to transpose on risk plans. Saliency bias, which occurs when we focus on items or information that are especially remarkable while casting aside those that lack prominence, is also picked up by nPlan’s algorithm.

According to nPlan, it’s often the more mundane or boring elements that go wrong on projects (eg reviewing drawings) rather than the bigger, more exciting activities. Subjectivity in assessing risk is difficult to avoid and if representatives on the project team just had a major issue occur on another project, it can steer the view that such an event could happen again. nPlan shortcuts this system, with its software able to tell us which project milestones have a likelihood of delay. However, it would be up to project stakeholders to determine how much of an impact this could have on other aspects of the construction process. Essentially, nPlan’s algorithm tells us where to fish - humans still need do the actual fishing.

If an activity is expected to be delayed, the algorithm cannot tell us why or where the forecasted delay came from. It does not know the causality of the delay – only the effect of the delay based on historical schedule data. nPlan’s software works by providing a probability distribution of an extension occurring rather than giving an accurate prediction. The algorithm may suggest that you haven’t considered risk in a certain area and that this could potentially blow out if not addressed. nPlan can also generate intervention recommendations (or IRs), suggesting that if the project team intervened on a specific part of the project, this is how the risk may change.

Knowing the probability of a project being extended allows stakeholders to then simplify the project and/or remove some of its quirks, as necessary. It also allows them to establish time contingencies as well as help remove any unnecessary individuals on projects, producing a cost saving.

From an investment perspective, quantifying risk in a systemic way introduces trust, leading to improved project outcomes and instilling a greater degree of confidence in investors. Project overrun and overspend are often a result of human bias. According to nPlan, high-quality data can replace these incorrect assumptions and help de-bias projects.

nPlan’s current focus is on project scheduling, risk forecasting and contingency management rather than cost modelling. nPlan does not currently intend to start cost modelling as this data is not embedded within the schedule file. Furthermore, construction costs are usually “all over the shop” and are often in different formats.

Conclusion

Artificial Intelligence is already transforming the construction industry and companies that are proactive in adopting AI technologies will gain a competitive advantage through increased productivity and efficiency.

Nevertheless, applying AI on construction projects can be challenging. Concerns over the quality of data for algorithm training and non-standardisation of projects (and therefore datasets) appears to be one of the main hurdles, while the fragmented nature of the industry resulting in data acquisition problems is another. For smaller firms, the high initial costs (and ongoing maintenance costs) will dissuade many from taking up AI technologies on affordability grounds. Even larger firms, that can afford the considerable financial commitment for R&D and application purposes, may determine after a cost-benefit analysis that the cost savings AI may bring to a project are not feasible. However, as AI in construction continues to expand and becomes more prevalent, implementation costs are likely to become more affordable for businesses.

After identifying the areas where AI can be applied, these larger/better resourced construction companies with substantial datasets will need to assess the opportunity and quality of their data in the Discovery Phase of an AI project. Understanding the limitations of the data in this phase will require an experienced and multidisciplinary team. Such a team will be able to determine how feasible it would be to use your current data sources (which may not be in the best condition), as training an algorithm on erroneous or ‘dirty’ data will yield poor results and compromise the predictive capability of any model that is built. They will also be able remove various categories of bias from the system and ensure the model is truly representative.

Despite the issues with dirty data and other adoption challenges mentioned in this article, the landscape of AI in construction is constantly changing and many speculate that the opportunities will far outweigh the challenges in the long run when AI technologies are mature. The construction industry will increasingly see AI as a major driver of change to improve efficiency, productivity, work processes, accuracy, consistency, and reliability in the long run.

[1] ‘Data quality’ refers to a combination of accuracy, completeness, uniqueness, timeliness, validity, relevancy, representativeness, sufficiency or consistency

[3] https://www.gov.uk/government/collections/data-protection-act-2018

[4] Using a using a combination of accuracy, bias, completeness, uniqueness, timeliness/currency, validity or consistency

[5] Overfitting happens when a model learns the patterns, noise and random fluctuations in the training data to the extent that it negatively impacts the performance of the model on new data. This means that the noise or random fluctuations in the training data is picked up and learned as concepts by the model. The problem is that these concepts do not apply to new data/unseen scenarios and negatively impact the model’s ability to generalise.